Train GPT-2 in your own language

1. Gathering the data.

Gathering good-quality data is one of the most important stages, as any data scientist would agree. So, we are going to assume that you already have a folder containing .txt files with all the data cleaned and stored. For ease, you can use the Wikipedia article data, which is freely available and can be downloaded with the following command.

python wikipedia_download.py --lang bn

This will create a folder containing all the Wikipedia files, which looks like this:


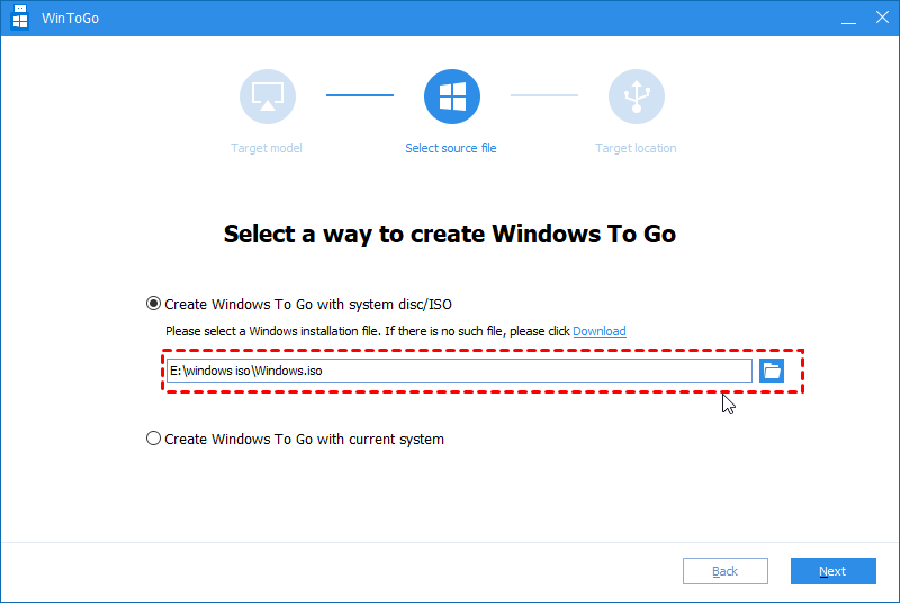

screenshot of file list

Note: Due to resource constraints, and since this is for demonstration purposes, I have trained the model on a small subset of books by Satyajit Ray, especially his detective Feluda series.

2. Tokenization

Now, the second step is to tokenize the data. For that, we use the following class.

Some notes on the tokenization:

- We use BPE (Byte Pair Encoding), which is a subword encoding. This generally avoids treating different forms of a word as unrelated tokens. For example, 'greatest' is treated as two tokens, 'great' and 'est'. This is advantageous because it retains the similarity between 'great' and 'greatest', while the extra token 'est' still marks 'greatest' as different. At the same time, it is not as low level as character-level encoding, which retains nothing of the meaning of a particular word.

- Another small but subtle point is NFKC (Normalization Form Compatibility Composition) in line 13 of the code. It is one of the standard Unicode normalization forms. It would not matter much if the language were English, but since we are using Bengali, whose script can encode the same character in more than one way, we use this specific form. More on it can be found in this link.
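The two notes above can be illustrated with the standard library alone. The following is a minimal sketch, not the tokenizer class used in this article: `normalize_nfkc`, `merge_pair`, `learn_bpe`, and `segment` are hypothetical helper names, and the toy word counts are made up for illustration. It shows NFKC mapping two different encodings of the same Bengali vowel sign to one form, and a greedy BPE trainer that repeatedly merges the most frequent adjacent pair of symbols.

```python
import unicodedata
from collections import Counter


def normalize_nfkc(text):
    """Apply Unicode NFKC normalization to a string."""
    return unicodedata.normalize("NFKC", text)


def merge_pair(symbols, pair):
    """Replace each adjacent occurrence of `pair` in `symbols` with the joined symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(word_counts, num_merges):
    """Learn up to `num_merges` merge rules from a {word: frequency} mapping."""
    # Every word starts as a sequence of single characters.
    vocab = {tuple(word): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, count in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += count
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        vocab = {merge_pair(s, best): c for s, c in vocab.items()}
    return merges


def segment(word, merges):
    """Split a word into subword tokens by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols


# The Bengali vowel sign O can be one code point or two (E followed by AA).
composed = "\u09cb"
decomposed = "\u09c7\u09be"
same_after_nfkc = normalize_nfkc(composed) == normalize_nfkc(decomposed)

# Toy corpus (made up): 'great' is frequent, 'greatest' is rare.
toy_counts = {"great": 10, "greatest": 1}
merges = learn_bpe(toy_counts, num_merges=4)
tokens = segment("greatest", merges)
print(same_after_nfkc, tokens)
```

With only four merges on this tiny corpus, the frequent stem 'great' becomes a single token while the suffix is still split into characters. On a real corpus with many more merges, frequent suffixes such as 'est' would be merged as well.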

So what we do here is tokenize our data and save it in a folder. Two files will be created (merges.txt and vocab.json) in a specified directory. To run the file, use the following code:

from tokenise import BPE_token
from pathlib import Path
import os

# the folder 'text' contains all the files
paths = [str(x) for x in Path("./text/").glob("**/*.txt")]

tokenizer = BPE_token()

# train the tokenizer model
tokenizer.bpe_train(paths)

# save the tokenized data in our specified folder
save_path = 'tokenized_data'
tokenizer.save_tokenizer(save_path)

3. Model Initialization

Before the real magic begins, we need to make sure the artillery is ready. Let us start with some initialization.

import tensorflow as tf
from transformers import GPT2Config, TFGPT2LMHeadModel, GPT2Tokenizer

# load the tokenizer from the saved model path
tokenizer = GPT2Tokenizer.from_pretrained(save_path)

# register the special tokens; these must match the special tokens
# the tokenizer was trained with
tokenizer.add_special_tokens({
    "eos_token": "</s>",
    "bos_token": "<s>",
    "unk_token": "<unk>",
    "pad_token": "<pad>",
    "mask_token": "<mask>"
})

# create the configuration from which the model can be made
config = GPT2Config(
    vocab_size=tokenizer.vocab_size,
    bos_token_id=tokenizer.bos_token_id,
    eos_token_id=tokenizer.eos_token_id
)

# create the model
model = TFGPT2LMHeadModel(config)

We also create a single string from all our documents and tokenize it.

single_string = ''
for filename in paths:
    with open(filename, "r", encoding='utf-8') as f:
        x = f.read()
    # separate documents with the end-of-sequence token
    single_string += x + tokenizer.eos_token

string_tokenized = tokenizer.encode(single_string)

After we have encoded the whole string, we move on to building a TensorFlow dataset, slicing the data into equal-sized blocks so that our model can learn. Here we use a block size of 100 (the number of tokens in each example) and a batch size of 12. These are kept low so that we can run it with ease on an RTX 2060 GPU.
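To make the slicing concrete before the real dataset code, here is a toy sketch in plain Python with no TensorFlow. The helper names `make_examples` and `split_inputs_labels` are hypothetical, and the token ids are just the numbers 0 to 9 with a block size of 4 instead of 100. Each block becomes one example, and the labels are the inputs shifted by one position, so the model learns to predict the next token.

```python
def make_examples(token_ids, block_size):
    """Cut a flat list of token ids into consecutive, non-overlapping blocks."""
    examples = []
    # A trailing chunk shorter than block_size is dropped.
    for i in range(0, len(token_ids) - block_size + 1, block_size):
        examples.append(token_ids[i:i + block_size])
    return examples


def split_inputs_labels(examples):
    """Build next-token prediction pairs: labels are inputs shifted left by one."""
    inputs = [ex[:-1] for ex in examples]
    labels = [ex[1:] for ex in examples]
    return inputs, labels


token_ids = list(range(10))  # stand-in for the encoded corpus
examples = make_examples(token_ids, block_size=4)
inputs, labels = split_inputs_labels(examples)

print(examples)  # [[0, 1, 2, 3], [4, 5, 6, 7]]
print(inputs)    # [[0, 1, 2], [4, 5, 6]]
print(labels)    # [[1, 2, 3], [5, 6, 7]]
```

Tokens 8 and 9 do not fill a whole block and are discarded, which is the same behavior as the loop bounds used in the dataset code.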

examples = []
block_size = 100
BATCH_SIZE = 12
BUFFER_SIZE = 1000

# cut the token stream into consecutive blocks of block_size tokens
for i in range(0, len(string_tokenized) - block_size + 1, block_size):
    examples.append(string_tokenized[i:i + block_size])

# inputs are all tokens but the last; labels are the same tokens shifted by one
inputs, labels = [], []
for ex in examples:
    inputs.append(ex[:-1])
    labels.append(ex[1:])

dataset = tf.data.Dataset.from_tensor_slices((inputs, labels))
dataset = dataset.shuffle(BUFFER_SIZE).batch(BATCH_SIZE, drop_remainder=True)

4. Model Training

Now comes the part we've been waiting for: compiling the model and training it. So we define our optimizer, loss function and metric, and start training.

# define our optimizer
optimizer = tf.keras.optimizers.Adam(learning_rate=3e-5, epsilon=1e-08, clipnorm=1.0)

# define our loss function
loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

# define the metric we want to observe
metric = tf.keras.metrics.SparseCategoricalAccuracy('accuracy')

# compile the model
model.compile(optimizer=optimizer, loss=[loss, *[None] * model.config.n_layer], metrics=[metric])

Now, let's train the model.

num_epoch = 10
history = model.fit(dataset, epochs=num_epoch)
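For intuition about what the loss and metric measure, here is a minimal plain-Python sketch of sparse categorical cross-entropy computed from logits, and of accuracy as the fraction of positions where the highest logit matches the label. The function names are hypothetical and the logits are made-up numbers. Keras computes the same quantities over tensors and averages the loss over all positions in a batch.

```python
import math


def sparse_categorical_crossentropy(logits, label):
    """Negative log-probability of `label` under softmax(logits)."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def sparse_categorical_accuracy(batch_logits, labels):
    """Fraction of positions whose argmax logit equals the integer label."""
    correct = 0
    for logits, label in zip(batch_logits, labels):
        predicted = max(range(len(logits)), key=lambda k: logits[k])
        correct += int(predicted == label)
    return correct / len(labels)


# Uniform logits over a 4-token vocabulary: the loss equals log(4).
uniform_loss = sparse_categorical_crossentropy([0.0, 0.0, 0.0, 0.0], label=2)

# Two positions over a 3-token vocabulary (made-up logits); only the first is right.
batch_logits = [[0.1, 2.0, 0.3], [1.5, 0.2, 0.1]]
accuracy = sparse_categorical_accuracy(batch_logits, labels=[1, 2])

print(uniform_loss, accuracy)
```

A freshly initialized model that spreads probability evenly over the vocabulary starts with a loss near the logarithm of the vocabulary size, so a falling loss in `history` means the model is assigning more probability to the correct next token.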