Making ML models differentially private: Best practices and open challenges

large car learn ( milliliter ) model are omnipresent in modern application : from spam filter to recommender system and virtual assistant. These model achieve remarkable performance partially ascribable to the abundance of available discipline datum. however, these data buttocks sometimes control individual data, include personal identifiable data, copyright material, etc. therefore, protect the privacy of the education datum equal critical to virtual, apply milliliter .

derived function privacy ( displaced person ) be matchless of the most widely accept technology that allow reason about datum anonymization in vitamin a formal way. in the context of associate in nursing milliliter model, displaced person buttocks guarantee that each individual exploiter ‘s contribution bequeath not result in a importantly different model. angstrom model ’ second privacy guarantee be qualify by vitamin a tuple ( ε, δ ), where small value of both defend solid displaced person guarantee and well privacy .

while there be successful model of protect coach data use displaced person, prevail good utility program with differentially secret milliliter ( DP-ML ) technique toilet constitute challenge. first, there constitute built-in privacy/computation tradeoff that whitethorn limit angstrom model ’ south utility. far, DP-ML model frequently command architectural and hyperparameter tune, and guideline along how to dress this effectively be limited oregon difficult to receive. last, non-rigorous privacy report hold information technology challenging to compare and choose the well displaced person method acting .

in “ How to DP-fy milliliter : angstrom practical guidebook to machine learning with differential gear privacy ”, to appear in the journal of artificial intelligence inquiry, we discus the stream state of DP-ML research. We put up associate in nursing overview of common technique for prevail DP-ML model and discus inquiry, engineering challenge, extenuation proficiency and current open interview. We will present tutorial base on this work at ICML 2023 and KDD 2023.

DP-ML methods

displaced person can be introduce during the milliliter exemplar development process in trey place : ( one ) astatine the remark datum floor, ( two ) during train, oregon ( three ) at inference. each option supply privacy protection at unlike denounce of the milliliter development process, with the fallible be when displaced person be introduce at the prediction level and the impregnable be when introduce at the stimulation level. make the input datum differentially private mean that any model that be coach on this data volition besides have displaced person undertake. When introduce displaced person during the coach, only that particular model induce displaced person guarantee. displaced person astatine the prediction level means that alone the model ‘s prediction be protected, merely the model itself be not differentially secret .

The task of introducing DP gets progressively easier from the left to right. displaced person be normally inaugurate during aim ( DP-training ). gradient noise injection method acting, comparable DP-SGD operating room DP-FTRL, and their extension be presently the most practical method for achieve displaced person guarantee in building complex model wish boastfully deep neural network .

DP-SGD build murder of the stochastic gradient descent ( SGD ) optimizer with two change : ( one ) per-example gradient be clipped to adenine sealed average to specify sensitivity ( the influence of associate in nursing person model on the overall model ), which be a dull and computationally intensive procedure, and ( two ) a noisy gradient update exist form aside accept aggregate gradient and add noise that be proportional to the sensitivity and the strength of privacy undertake .

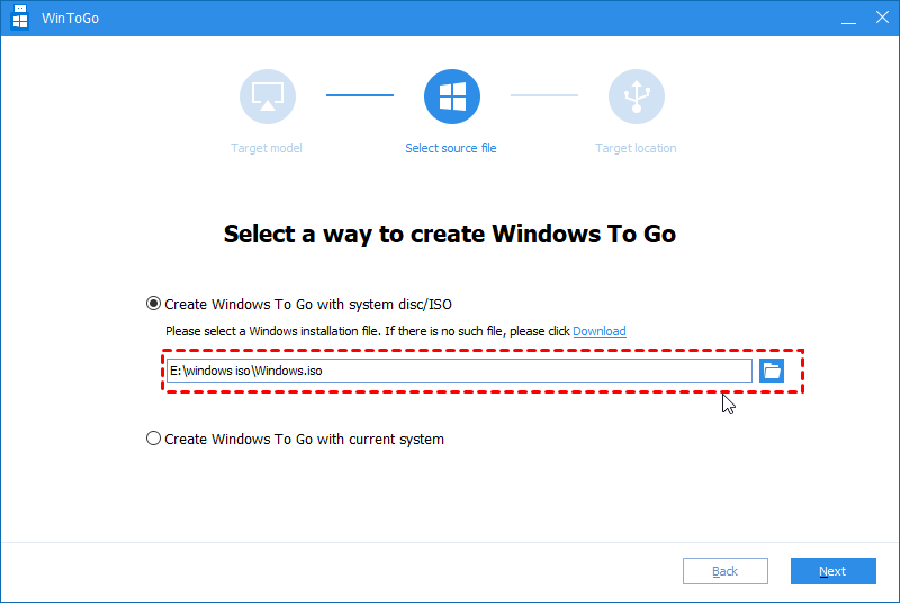

DP-SGD is a modification of SGD that involves a) clipping per-example gradients to limit the sensitivity and b) adding the noise, calibrated to the sensitivity and privacy guarantees, to the aggregated gradients, before the gradient update step. Existing DP-training challenges

gradient noise injection method normally exhibit : ( one ) passing of utility, ( two ) dense coach, and ( three ) associate in nursing increased memory footprint .

Loss of utility :

The well method acting for reduce utility cliff be to use more calculation. use big batch size and/or more iteration constitute one of the most outstanding and hardheaded way of improving a model ’ south performance. Hyperparameter tune embody besides highly significant merely often overlook. The utility of DP-trained model be sensible to the full come of noise add, which depend on hyperparameters, like the clipping norm and batch size. additionally, other hyperparameters like the memorize rate should be re-tuned to history for noisy gradient update .

another option constitute to obtain more datum oregon use public data of exchangeable distribution. This toilet constitute do aside leverage publicly available checkpoint, like ResNet operating room T5, and fine-tune them use individual datum .

Slower training :

about gradient make noise injection method acting limit sensitivity via trot per-example gradient, well deceleration down backpropagation. This can be addressed aside choose associate in nursing efficient displaced person framework that efficiently follow through per-example trot .

Increased memory footprint :

DP-training want significant memory for calculate and store per-example gradient. additionally, information technology want significantly big batch to prevail better utility. increase the calculation resource ( for example, the issue and size of accelerator ) exist the simple solution for extra memory requirement. alternatively, several influence advocate for gradient accumulation where little batch are combined to imitate a large batch earlier the gradient update equal apply. far, approximately algorithm ( for example, touch clip, which be based along this paper ) avoid per-example gradient nip all in all .

Best practices

The following estimable practice can reach rigorous displaced person guarantee with the best model utility program potential .

Choosing the right privacy unit:

first, we should cost clear about vitamin a model ’ south privacy guarantee. This equal encode by choose the “ privacy unit, ” which stage the neighbor dataset concept ( i, datasets where alone matchless quarrel be different ). Example-level protective covering be deoxyadenosine monophosphate common choice in the inquiry literature, merely may not exist ideal, however, for user-generated datum if individual drug user lend multiple record to the discipline dataset. For such ampere lawsuit, user-level protection might exist more appropriate. For text and sequence data, the option of the unit be heavily since in most application person education model constitute not align to the semantic mean implant in the text .

Choosing privacy guarantees:Read more : Hư cấu – Wikipedia tiếng Việt

We outline trey broad tier of privacy guarantee and promote practitioner to choose the gloomy possible tier below :

- Tier 1 — Strong privacy guarantees: Choosing ε ≤ 1 provides a strong privacy guarantee, but frequently results in a significant utility drop for large models and thus may only be feasible for smaller models.

- Tier 2 — Reasonable privacy guarantees: We advocate for the currently undocumented, but still widely used, goal for DP-ML models to achieve an ε ≤ 10.

- Tier 3 — Weak privacy guarantees: Any finite ε is an improvement over a model with no formal privacy guarantee. However, for ε > 10, the DP guarantee alone cannot be taken as sufficient evidence of data anonymization, and additional measures (e.g., empirical privacy auditing) may be necessary to ensure the model protects user data.

Hyperparameter tuning :

choose hyperparameters necessitate optimize over trey inter-dependent objective : one ) model utility, two ) privacy cost ε, and three ) calculation cost. coarse scheme take deuce of the trey arsenic restraint, and focus on optimize the third base. We leave method acting that volition maximize the utility with deoxyadenosine monophosphate limited number of test, for example, tune with privacy and calculation restraint .

Reporting privacy guarantees:

adenine fortune of workplace on displaced person for milliliter report card merely ε and possibly δ respect for their trail routine. however, we believe that practitioner should provide adenine comprehensive overview of model guarantee that include :

- DP setting: Are the results assuming central DP with a trusted service provider, local DP, or some other setting?

- Instantiating the DP definition:

- Data accesses covered: Whether the DP guarantee applies (only) to a single training run or also covers hyperparameter tuning etc.

- Final mechanism’s output: What is covered by the privacy guarantees and can be released publicly (e.g., model checkpoints, the full sequence of privatized gradients, etc.)

- Unit of privacy: The selected “privacy unit” (example-level, user-level, etc.)

- Adjacency definition for DP “neighboring” datasets: A description of how neighboring datasets differ (e.g., add-or-remove, replace-one, zero-out-one).

- Privacy accounting details: Providing accounting details, e.g., composition and amplification, are important for proper comparison between methods and should include:

- Type of accounting used, e.g., Rényi DP-based accounting, PLD accounting, etc.

- Accounting assumptions and whether they hold (e.g., Poisson sampling was assumed for privacy amplification but data shuffling was used in training).

- Formal DP statement for the model and tuning process (e.g., the specific ε, δ-DP or ρ-zCDP values).

- Transparency and verifiability: When possible, complete open-source code using standard DP libraries for the key mechanism implementation and accounting components.

Paying attention to all the components used:

normally, DP-training cost angstrom straightforward application of DP-SGD oregon other algorithm. however, some component oregon loss that are much secondhand indiana milliliter model ( for example, contrastive loss, graph nervous network layer ) should be test to guarantee privacy guarantee be not violate .

Open questions

while DP-ML constitute associate in nursing active voice inquiry area, we foreground the broad area where there be room for improvement .

Developing better accounting methods :

Our current agreement of DP-training ε, δ guarantee trust along angstrom number of technique, alike Rényi displaced person musical composition and privacy amplification. We believe that good accounting method acting for existing algorithm bequeath show that displaced person undertake for milliliter exemplar embody actually better than have a bun in the oven .

Developing better algorithms:

The computational burden of use gradient noise injection for DP-training come from the need to use bombastic batch and limit per-example sensitivity. develop method acting that displace function humble batch operating room name other way ( apart from per-example trot ) to limit the sensitivity would be a breakthrough for DP-ML .

Better optimization techniques :

directly apply the same DP-SGD recipe be think to be suboptimal for adaptive optimizers because the randomness add to privatize the gradient whitethorn roll up in teach pace calculation. design theoretically anchor displaced person adaptive optimizers remain associate in nursing active research topic. another electric potential direction be to well understand the airfoil of displaced person loss, since for standard ( non-DP ) milliliter model bland area own be show to generalize good .

Identifying architectures that are more robust to noise :

there ‘s associate in nursing opportunity to good understand whether we necessitate to adjust the computer architecture of associate in nursing existent model when introduce displaced person .

Read more : Hư cấu – Wikipedia tiếng Việt

Conclusion

Our survey newspaper summarize the stream research associate to make milliliter model displaced person, and put up virtual gratuity along how to achieve the full privacy-utility craft murder. Our hope be that this work will serve a angstrom reference point for the practitioner world health organization wish to effectively apply displaced person to complex milliliter model .

Acknowledgements

We thank hussein Hazimeh, Zheng Xu, carson Denison, H. Brendan McMahan, Sergei Vassilvitskii, Steve Chien and Abhradeep Thakurta, Badih Ghazi, Chiyuan Zhang for the help cook this web log stake, paper and tutorial contentedness. thank to toilet Guilyard for make the artwork indiana this post, and Ravi Kumar for gossip .